03 February 2016

Don't use curl in Dockerfiles

01 November 2015

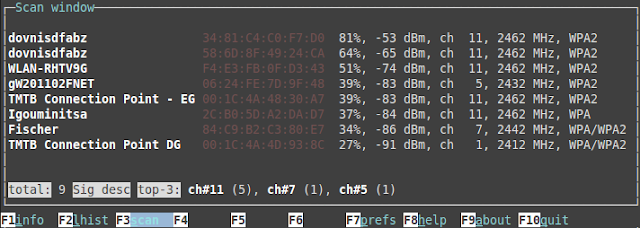

Fixing WiFi repeater issues on Ubuntu 15

sudo apt-get install wavemon && sudo wavemon

29 March 2015

Detailed ELB Latency Percentiles with Lambda

Lambda to the rescue

Figure: Data Flow

The Code

05 February 2014

Remove big files from Git repositories permanently

First you need to rewrite history:

git filter-branch --index-filter "git rm -r --cached --ignore-unmatch *.gem" --tag-name-filter cat -- --all

Note the -r and the use of wildcards inside the index-filter command. Together with the other options this means that all *.gem files in all commits and tags are found and removed. The command prints every object it has deleted. If it doesn't print anything useful, you have made an error!

Now delete the backup created by git filter-branch:

rd /q /s ".git/refs/original"

Some magic to get rid of orphaned objects inside the git repository:

git reflog expire --expire=now --all

git gc --prune=now

Verify that all files are really gone with git log -- *.gem and then repack your repository.

git gc --prune=now --aggressive

Finally, push your shrunken repository to the origin.

git push origin --force

The next time you clone the repository you get the shrunken version.

Update: But as soon as you do a git pull (--rebase), all the unneeded and painfully removed objects are downloaded to your hard disk again. The only way to prevent this is to delete the repository on GitHub and replace it with the shrunken one (without changing names or URLs). Astonishingly, existing clones continued to work with the replaced repository.

Update 2: GitHub itself now has a nice article explaining the process of cleaning/shrinking repositories, including a link to a tool called BFG Repo-Cleaner that is specialized for this task.

20 November 2012

Understand and Prevent Deadlocks

Can you explain a typical C# deadlock in a few words? Do you know the simple rules that help you to write deadlock free code? Yes? Then stop reading and do something more useful.

If several threads have read/write access to the same data, it is often necessary to limit access to only one thread at a time. This can be done with the C# lock statement. Only one thread at a time can execute code that is protected by a lock statement and a given lock object. It is important to understand that it is not the lock statement that protects the code, but the object given as an argument to the lock statement. If you don't know how the lock statement works, please read the MSDN documentation before continuing. Using a lock statement is better than directly using a Mutex or EventWaitHandle because it protects you from stale locks that can occur if you forget to release your lock when an exception happens.

A deadlock can occur only if you use more than one lock object and the locks are acquired by each thread in a different order. Look at the following sequence diagram:

There are two threads A and B and two resources X and Y. Each resource is protected by a lock object.

Thread A acquires a lock for Resource X and continues. Then Thread B acquires a lock for Y and continues. Now Thread A tries to acquire a lock for Y. But Y is already locked by Thread B. This means Thread A is blocked now and waits until Y is released. Meanwhile Thread B continues and now needs a lock for X. But X is already locked by Thread A. Now Thread A is waiting for Thread B and Thread B is waiting for Thread A, so both threads will wait forever. Deadlock!

The corresponding code could look like this.
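A minimal sketch of that sequence, assuming two lock objects named X and Y as in the description. The class name DeadlockDemo, the helper LockBoth and the 200 ms timeout are invented for this illustration. One deliberate deviation: the second lock is taken with Monitor.TryEnter and a timeout instead of a nested lock statement, so the demo can report the deadlock instead of hanging forever.

```csharp
using System;
using System.Threading;

static class DeadlockDemo
{
    static readonly object X = new object();   // protects resource X
    static readonly object Y = new object();   // protects resource Y

    // Takes 'first', waits until the other thread holds its own first lock,
    // then tries to take 'second'. Returns false if 'second' could not be
    // acquired within the timeout, which is where a nested lock would block.
    static bool LockBoth(object first, object second, Barrier bothHoldFirst)
    {
        lock (first)
        {
            bothHoldFirst.SignalAndWait();      // force the interleaving described above
            if (!Monitor.TryEnter(second, TimeSpan.FromMilliseconds(200)))
                return false;                   // lock (second) would wait here
            try { return true; }
            finally { Monitor.Exit(second); }
        }
    }

    // Thread A locks X then Y, thread B locks Y then X.
    // Returns true if at least one of them could not get its second lock.
    public static bool Run()
    {
        using (var barrier = new Barrier(2))
        {
            bool aOk = true, bOk = true;
            var a = new Thread(() => aOk = LockBoth(X, Y, barrier));
            var b = new Thread(() => bOk = LockBoth(Y, X, barrier));
            a.Start(); b.Start();
            a.Join(); b.Join();
            return !aOk || !bOk;
        }
    }
}
```

DeadlockDemo.Run() returns true: whichever thread gives up first must have timed out, because the other thread was still holding the lock it wanted. With a nested lock (second) in place of TryEnter, neither thread would ever return. Acquiring X before Y in both threads removes the problem.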

But after re-factoring the getter for ResourceX to this

get { lock (X) return _resourceX ?? ResourceY; }

you have the same deadlock as in the first code sample!

Deadlock prevention rules

- Don't use static fields. Without static fields there is no need for locks.

- Don't reinvent the wheel. Use thread safe data structures from System.Collections.Concurrent or System.Threading.Interlocked before pouring lock statements over your code.

- A lock statement must be short in code and time. The lock should last nanoseconds not milliseconds.

- Don't call external code inside a lock block. Try to move this code outside the lock block. Only the manipulation of known private data should be protected. You don't know if external code contains locks now or in future (think of refactoring).
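As a rough sketch of how these rules combine (the HitCounter class and all of its member names are hypothetical): the counters use Interlocked and ConcurrentDictionary instead of lock statements, and the one remaining lock only guards a private field, while the external callback is invoked outside of it.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading;

class HitCounter
{
    private long _total;                                    // only touched via Interlocked
    private readonly ConcurrentDictionary<string, int> _perPage =
        new ConcurrentDictionary<string, int>();
    private readonly object _sync = new object();
    private Action<long> _onHit;                            // external code supplied by callers

    public void Subscribe(Action<long> callback)
    {
        lock (_sync) { _onHit += callback; }                // short lock, private data only
    }

    public void Hit(string page)
    {
        long total = Interlocked.Increment(ref _total);     // no lock needed
        _perPage.AddOrUpdate(page, 1, (key, old) => old + 1);

        Action<long> callback;
        lock (_sync) { callback = _onHit; }                 // copy the reference under the lock
        if (callback != null) callback(total);              // call external code outside the lock
    }

    public long Total { get { return Interlocked.Read(ref _total); } }

    public int HitsFor(string page)
    {
        int hits;
        return _perPage.TryGetValue(page, out hits) ? hits : 0;
    }
}
```

Because the callback runs after the lock has been released, it does not matter whether the subscriber takes locks of its own.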

If you follow these rules, you have a good chance of never introducing a deadlock in your whole career.

14 November 2012

How to find deadlocks in an ASP.NET application almost automatically

This is a quick how-to for finding deadlocks in an IIS/ASP.NET application running on a production server with .NET 4 or .NET 4.5.

A deadlock bug inside your ASP.NET application is very ugly. And if it shows up only on some random production server of your web farm, you may feel like doom is just ahead. But with some simple tools you can catch and analyze those bugs.

These are the tools you need:

- ProcDump from SysInternals

- WinDbg from Microsoft (available as part of the Windows SDK)

- sos.dll (part of the .NET framework)

- sosex from STEVE'S TECHSPOT (copy it into your WinDbg binaries folder)

If you think a deadlock occurred do the following:

1. Connect to the server

2. Open IIS Manager

3. Open Worker Processes

4. Select the application pool that is suspected to be deadlocked

5. Verify that you indeed have a deadlock (see the screenshot below)

6. Note the <Process-ID> (see screenshot)

7. Create a dump with procdump -ma <Process-ID>

   There are other tools, like Task Manager or Process Explorer, that can create dumps, but only ProcDump is smart enough to create 32-bit dumps for 32-bit processes on a 64-bit OS.

8. Copy the dump and any available .pdb (symbol) files to your developer machine.

9. Depending on the bitness of your dump, start either WinDbg (X86) or WinDbg (X64)

10. Init the symbol path (File->Symbol File Path ...)

    SRV*c:\temp\symbols*http://msdl.microsoft.com/download/symbols

11. File->Open Crash Dump

12. Enter the following commands in the WinDbg Command Prompt and wait

    - .loadby sos clr

    - !load sosex

    - !dlk

0:000> .loadby sos clr

0:000> !load sosex

This dump has no SOSEX heap index.

The heap index makes searching for references and roots much faster.

To create a heap index, run !bhi

0:000> !dlk

Examining SyncBlocks...

Scanning for ReaderWriterLock instances...

Scanning for holders of ReaderWriterLock locks...

Scanning for ReaderWriterLockSlim instances...

Scanning for holders of ReaderWriterLockSlim locks...

Examining CriticalSections...

Scanning for threads waiting on SyncBlocks...

*** WARNING: Unable to verify checksum for mscorlib.ni.dll

Scanning for threads waiting on ReaderWriterLock locks...

Scanning for threads waiting on ReaderWriterLocksSlim locks...

*** WARNING: Unable to verify checksum for System.Web.Mvc.ni.dll

*** ERROR: Module load completed but symbols could not be loaded for System.Web.Mvc.ni.dll

Scanning for threads waiting on CriticalSections...

*** WARNING: Unable to verify checksum for System.Web.ni.dll

*DEADLOCK DETECTED*

CLR thread 0x5 holds the lock on SyncBlock 0126fa70 OBJ:103a5878[System.Object]

...and is waiting for the lock on SyncBlock 0126fb0c OBJ:103a58d0[System.Object]

CLR thread 0xa holds the lock on SyncBlock 0126fb0c OBJ:103a58d0[System.Object]

...and is waiting for the lock on SyncBlock 0126fa70 OBJ:103a5878[System.Object]

CLR Thread 0x5 is waiting at System.Threading.Monitor.Enter(System.Object, Boolean ByRef)(+0x17 Native)

CLR Thread 0xa is waiting at System.Threading.Monitor.Enter(System.Object, Boolean ByRef)(+0x17 Native)

1 deadlock detected.

Now you know that the managed threads 0x5 and 0xa are waiting on each other. With the !threads command you get a list of all threads. The ID column (in decimal) is the managed thread ID. The WinDbg thread number is written to the left of it. With the ~[5]e!clrstack command you can see the stack trace of CLR thread 0x5. Or just use ~*e!clrstack to see all stack traces. With this information you should immediately see the reason for the deadlock and can start fixing the problem.

Screenshot: Deadlocked Requests visible in IIS Worker Process

Automate the Deadlock Detection

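If you are smart, create a little script that automates steps 2 to 7. We use this PowerShell script to check for a deadlock situation every minute:

param($elapsedTimeThreshold, $requestCountThreshold)

# The wildcard matches the WebAdministration module
Import-Module WebAd*

$i = 1
$appPools = Get-Item IIS:\AppPools\*

# $i is never incremented, so this loop runs until the script is stopped
while ($i -le 5) {
    ForEach ($appPool in $appPools) {
        # Count the requests that have been running longer than the threshold;
        # @(...) keeps .Count reliable when only a single request matches
        $count = @($appPool | Get-WebRequest | ? { $_.timeElapsed -gt $elapsedTimeThreshold }).Count
        if ($count -gt $requestCountThreshold) {
            # Too many long-running requests: write a full memory dump of the worker process
            $id = dir IIS:\AppPools\$($appPool.Name)\WorkerProcesses\ | Select-Object -expand processId
            $filename = "id_" + $id + ".dmp"
            $options = "-ma"
            $allArgs = @($options, $id, $filename)
            procdump.exe $allArgs
        }
    }
    Start-Sleep -s 60
}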

07 November 2012

Cooperative Thread Abort in .NET

Did you know that .NET uses a cooperative thread abort mechanism?

As someone coming from a C++ background I always thought that killing a thread is bad behavior and should be prevented at all costs. When you terminate a Win32 thread it can interrupt the thread in any state at every machine instruction so it may leave corrupted data structures behind.

A .NET thread cannot be terminated. Instead you can abort it. This is not just a naming issue, it is indeed a different behavior. If you call Abort() on a thread, a ThreadAbortException is thrown in the aborted thread. All active catch and finally blocks will be executed before the thread eventually gets terminated. In theory, this allows the thread to do a proper cleanup. In reality this works only if every line of code is written so that it can handle a ThreadAbortException properly. And the first time you call 3rd-party code that is not under your control you are doomed.

To make the situation more complex, there are situations where throwing the ThreadAbortException is delayed. In versions prior to .NET 4.5 this was poorly documented, but the brand new documentation of the Thread.Abort method is very explicit about this. A thread cannot be aborted inside a catch or finally block, a static constructor (and probably not during the initialization of static fields either) or any other constrained execution region.

Why is this important to you?

Well, if you are working in an ASP.NET/IIS environment, the framework itself will call Abort() on threads that have been executing for too long. In this way IIS can heal itself if some requests hit bad blocking code like endless loops, deadlocks or waits on external requests. But if you were unlucky enough to implement your blocking code inside static constructors or catch or finally blocks, your requests will hang forever in your worker process. It will look like the httpRuntime executionTimeout is not working, and only an iisreset will cure the situation.

Download the CooperativeThreadAbortDemo sample application.

27 September 2012

Embed Url Links in TeamCity Build Logs

But it seems that there is no way to include links in TeamCity build logs. TeamCity correctly escapes all the output from test and build tools, so it is not possible to get any HTML into the log. So I investigated TeamCity's extension points. Writing a complete custom HTML report seemed to be overkill because the test reporting tab worked really well. Writing a UI plugin means learning Java, JSP and so on, and as a .NET company we don't want to fumble around with the Java technology stack. But as a web company we know how to hack JavaScript ;-)

There is already a TeamCity plugin called StaticUIExtensions that allows you to embed static HTML fragments in TeamCity pages. And because a script tag is a valid HTML fragment, we could inject JavaScript into TeamCity. So I wrote a few lines of JavaScript that scan the DOM for URLs and transform them into links. With this technique you get clickable links in all the build logs.

What you need to do:

- Install StaticUIExtensions

- On your TeamCity server, open your "server\config\_static_ui_extensions" folder

- Open static-ui-extensions.xml

- Add a new rule that inserts "show-link.html" into every page that starts with "viewLog.html"

<rule html-file="show-link.html" place-id="BEFORE_CONTENT">
  <url starts="viewLog.html" />
</rule>

- Create a new file "show-link.html" with this content:

<script>
(function ($) {
    var regex = /url\((.*)\)/g;
    function createLinksFromUrls() {
        $("div .fullStacktrace, div .msg").each(function () {
            var div = $(this);
            var oldHtml = div.html();
            var newHtml = oldHtml.replace(regex, "<a href='$1' target='_blank'>$1</a>");
            if (oldHtml !== newHtml) div.html(newHtml);
        });
    }
    $(document).ready(createLinksFromUrls);
    $(document).click(function () {
        window.setTimeout(createLinksFromUrls, 50);
        window.setTimeout(createLinksFromUrls, 100);
        window.setTimeout(createLinksFromUrls, 500);
    });
})(window.jQuery);
</script>

Voilà, mission completed.

10 August 2012

"Right" vs "Simple"

MIT

- Simplicity

- The design must be simple, both in implementation and interface. It is more important for the interface to be simple than the implementation.

- Correctness

- The design must be correct in all observable aspects. Incorrectness is simply not allowed.

- Consistency

- The design must not be inconsistent. A design is allowed to be slightly less simple and less complete to avoid inconsistency. Consistency is as important as correctness.

- Completeness

- The design must cover as many important situations as is practical. All reasonably expected cases must be covered. Simplicity is not allowed to overly reduce completeness.

New Jersey

- Simplicity

- The design must be simple, both in implementation and interface. It is more important for the implementation to be simple than the interface. Simplicity is the most important consideration in a design.

- Correctness

- The design must be correct in all observable aspects. It is slightly better to be simple than correct.

- Consistency

- The design must not be overly inconsistent. Consistency can be sacrificed for simplicity in some cases, but it is better to drop those parts of the design that deal with less common circumstances than to introduce either implementational complexity or inconsistency.

- Completeness

- The design must cover as many important situations as is practical. All reasonably expected cases should be covered. Completeness can be sacrificed in favor of any other quality. In fact, completeness must be sacrificed whenever implementation simplicity is jeopardized. Consistency can be sacrificed to achieve completeness if simplicity is retained; especially worthless is consistency of interface.

29 March 2012

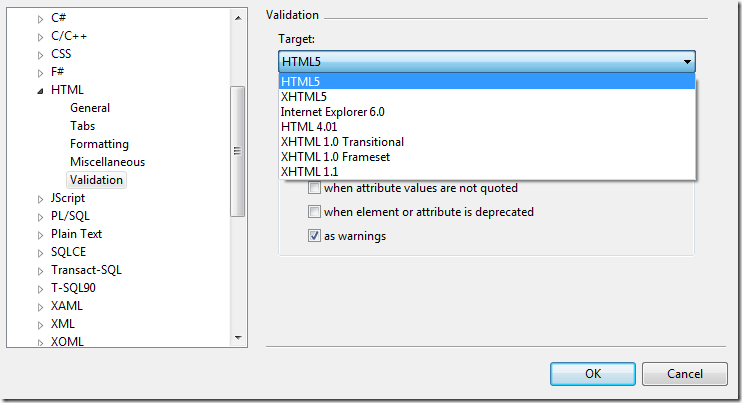

HTML5 without warnings in Visual Studio

Today I was annoyed by the warnings that Visual Studio shows when editing an HTML5 file. Example: VS expects a type attribute inside the script tag, but HTML5 doesn't require it anymore (because it defaults to JavaScript).

When opening the context menu I noticed the "Formatting and Validation" item and opened it:

Choosing "HTML5" as a target removes all those annoying wrong warnings :-)

27 November 2011

Speed up your build with UseHardlinksIfPossible

MSBuild 4.0 added the new attribute "UseHardlinksIfPossible" to the Copy task. Using hard links makes your build faster because fewer I/O operations and less disk space are needed (= better usage of the file system cache). What's best is that this new option is already used by the standard .NET build system! But Microsoft decided to turn it off by default.

After searching a little bit in the C# targets files I found out how to turn this feature on globally, and my build was 20% faster than before. And if you have big builds with more than a hundred projects this counts!

So here comes the way to turn on hard linking in your build. First, this works only with NTFS. Second, you have to explicitly set the ToolsVersion to 4.0. You can do this with a command line argument (msbuild /tv:4.0) or inside the project file (<Project DefaultTargets="Build" ToolsVersion="4.0" ...).

Then you have to override the following properties with a value of "True":

- CreateHardLinksForCopyFilesToOutputDirectoryIfPossible

- CreateHardLinksForCopyAdditionalFilesIfPossible

- CreateHardLinksForCopyLocalIfPossible

- CreateHardLinksForPublishFilesIfPossible

Use command line properties (msbuild /p:CreateHardLinksForCopyLocalIfPossible=true) to override them for all projects in one build. Or you can create a little startup build file that collects all projects and sets the properties in one place. Here is mine:

<Project DefaultTargets="Build" ToolsVersion="4.0" xmlns="http://schemas.microsoft.com/developer/msbuild/2003">
  <PropertyGroup>
    <Include>**\*.csproj</Include>
    <Exclude>_build\**\*.csproj</Exclude>
    <CreateHardLinksIfPossible>true</CreateHardLinksIfPossible>
  </PropertyGroup>
  <ItemGroup>
    <ProjectFiles Include="$(Include)" Exclude="$(Exclude)"/>
  </ItemGroup>
  <Target Name="Build">
    <MSBuild Projects="@(ProjectFiles)" Targets="Build" BuildInParallel="True" ToolsVersion="4.0"
             Properties="Configuration=$(Configuration);
                         BuildInParallel=True;
                         CreateHardLinksForCopyFilesToOutputDirectoryIfPossible=$(CreateHardLinksIfPossible);
                         CreateHardLinksForCopyAdditionalFilesIfPossible=$(CreateHardLinksIfPossible);
                         CreateHardLinksForCopyLocalIfPossible=$(CreateHardLinksIfPossible);
                         CreateHardLinksForPublishFilesIfPossible=$(CreateHardLinksIfPossible);
                         " />
  </Target>
</Project>

26 June 2011

Exception Logging Antipatterns

Here are some logging antipatterns I have seen again and again in real life production code. If your application has one global exception handler, catching and logging should be done only in this central place. If you want to provide additional information throw a new exception and attach the original exception. I assume that the logging framework is capable of dumping an exception recursively, that means with all inner exceptions and their stacktraces.
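To make the recommended shape concrete before looking at the antipatterns, here is a small sketch. All names (OrderImport, ReadQuantity, RunWithGlobalHandler) are hypothetical, and Exception.ToString() stands in for the recursive dump of a real logging framework.

```csharp
using System;

static class OrderImport
{
    // Low-level code: no catch, no logging.
    static int ParseQuantity(string text)
    {
        return int.Parse(text);
    }

    // Adds context by wrapping; the original exception travels along as InnerException.
    public static int ReadQuantity(string orderId, string text)
    {
        try
        {
            return ParseQuantity(text);
        }
        catch (FormatException ex)
        {
            throw new InvalidOperationException("Invalid quantity in order " + orderId, ex);
        }
    }

    // Stand-in for the global exception handler: the only place that logs.
    // Returns the text that would be written to the log, or null if nothing failed.
    public static string RunWithGlobalHandler(Action action)
    {
        try
        {
            action();
            return null;
        }
        catch (Exception ex)
        {
            return ex.ToString();   // includes inner exceptions and their stack traces
        }
    }
}
```

Calling OrderImport.RunWithGlobalHandler(() => OrderImport.ReadQuantity("42", "abc")) yields a single log entry that contains both the added context and the original FormatException.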

Catch Log Throw

try
{
    [...] // some code
}
catch (Exception ex)
{
    _logger.WriteError(ex);
    throw;
}

No additional information is added. The global exception handler will log this error anyway, so the logging is redundant and bloats your log. The correct solution is to not catch the exception at all.

Catch Log Throw Other

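try
{
    [...] // some code
}
catch (Exception ex)
{
    _logger.WriteError(ex, "information");
    throw new InvalidOperationException("information"); // same information
}

The same information ends up in the log twice, because the global exception handler logs the new exception as well, and the new exception no longer carries the original one. Solution: don't log; attach the original exception as the inner exception:

throw new InvalidOperationException("information", ex);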

Log Un-thrown Exceptions

try
{
    [...] // some code
}
catch (Exception ex)
{
    var myException = new MyException("information");
    _logger.WriteError(myException);
    throw myException;
}

In this case an un-thrown exception is logged. This can cause problems, because the exception is not fully initialized until it is thrown. For example, the StackTrace property would be null. Solution: don't log, just attach the original exception ex to MyException:

throw new MyException("information", ex);

Non Atomic Logging

try
{
    [...] // some code
}
catch (Exception ex)
{
    _logger.WriteError(ex.Message);
    _logger.WriteError("Some information");
    _logger.WriteError(ex);
    _logger.WriteError("More information");
}

Several log messages are created for one cause. In the log they appear unrelated and can be mixed with other log messages. Solution: Combine the information into one atomic write to the logging system:

_logger.WriteError(ex, "Some information and more information");

Expensive Log Messages

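{
    [...] // some code
    _logger.WriteInformation(Helper.ReflectAllProperties(this));
}

The expensive message is built even when the Information level is switched off. Solution: check the log level first:

if (_logger.ShouldWrite(LogLevel.Information))
{
    // do expensive logging here
    _logger.WriteInformation(Helper.ReflectAllProperties(this));
}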
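A variation on the same idea, sketched with a hypothetical Logger class. ShouldWrite and WriteInformation mirror the names used above; the Func<string> overload, the Lines list and the LogLevel values are assumptions for this example, not the API of a specific logging framework. If the logger accepts a message factory, the level check lives inside the logger and callers cannot forget it.

```csharp
using System;
using System.Collections.Generic;

enum LogLevel { Information = 0, Error = 1 }

class Logger
{
    private readonly LogLevel _minimum;

    // Stands in for the real log sink so the behavior can be observed.
    public readonly List<string> Lines = new List<string>();

    public Logger(LogLevel minimum)
    {
        _minimum = minimum;
    }

    public bool ShouldWrite(LogLevel level)
    {
        return level >= _minimum;
    }

    // The factory is invoked only if the Information level is enabled,
    // so an expensive message is never built for nothing.
    public void WriteInformation(Func<string> messageFactory)
    {
        if (ShouldWrite(LogLevel.Information))
            Lines.Add(messageFactory());
    }
}
```

A call then looks like _logger.WriteInformation(() => Helper.ReflectAllProperties(this)); the reflection only runs when the message is actually written.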

13 June 2011

Disable ASP.NET Development Server

- Select the web or WCF project. Press F4 to show the property window. If only an empty window appears, repeat the process.

- Set the first property to "False".

If your solution contains projects that start the ASP.NET Development Server, you will enjoy my macro that sets this property solution-wide:

Sub DisableDevelopmentServer() ' the macro name is arbitrary
    REM The dynamic property CSharpProjects returns all projects
    REM recursively. "Solution.Projects" would return only the top
    REM level projects. Use VBProjects if you are using VB :-).
    Dim projects = CType(DTE.GetObject("CSharpProjects"), Projects)
    For Each p As Project In projects
        For Each prop In p.Properties
            If prop.Name = "WebApplication.StartWebServerOnDebug" Then
                prop.Value = False
            End If
        Next
    Next
End Sub

Update: This solution no longer works for VS2012. An addin with the same functionality is available on GitHub: https://github.com/algra/VSTools

16 February 2011

Visual Studio 2010 Javascript Snippets for Jasmine

Because ReSharper 5 does not support live templates for JavaScript I’m forced to use the built-in VS2010 snippets. The default JavaScript snippets are located here:

%ProgramFiles%\Microsoft Visual Studio 10.0\Web\Snippets\JScript\1033\JScript

The ‘1033’ locale ID may be different for your country. I’m using the following snippets for creating Jasmine specs:

describe

<CodeSnippet Format="1.1.0" xmlns="http://schemas.microsoft.com/VisualStudio/2005/CodeSnippet">
  <Header>
    <Title>describe</Title>
    <Author>Christian Rodemeyer</Author>
    <Shortcut>describe</Shortcut>
    <Description>Code snippet for a jasmine 'describe' function</Description>
    <SnippetTypes>
      <SnippetType>Expansion</SnippetType>
    </SnippetTypes>
  </Header>
  <Snippet>
    <Declarations>
      <Literal>
        <ID>suite</ID>
        <ToolTip>suite description</ToolTip>
        <Default>some suite</Default>
      </Literal>
    </Declarations>
    <Code Language="jscript"><![CDATA[describe("$suite$", function () {
    $end$
});]]></Code>
  </Snippet>
</CodeSnippet>

it

<CodeSnippet Format="1.1.0" xmlns="http://schemas.microsoft.com/VisualStudio/2005/CodeSnippet">
  <Header>
    <Title>it</Title>
    <Author>Christian Rodemeyer</Author>
    <Shortcut>it</Shortcut>
    <Description>Code snippet for a jasmine 'it' function</Description>
    <SnippetTypes>
      <SnippetType>Expansion</SnippetType>
    </SnippetTypes>
  </Header>
  <Snippet>
    <Declarations>
      <Literal>
        <ID>spec</ID>
        <ToolTip>spec description</ToolTip>
        <Default>expected result</Default>
      </Literal>
    </Declarations>
    <Code Language="jscript"><![CDATA[it("should be $spec$", function () {
    var result = $end$
});]]></Code>
  </Snippet>
</CodeSnippet>

func

<CodeSnippet Format="1.1.0" xmlns="http://schemas.microsoft.com/VisualStudio/2005/CodeSnippet">
  <Header>
    <Title>function</Title>
    <Author>Christian Rodemeyer</Author>
    <Shortcut>func</Shortcut>
    <Description>Code snippet for an anonymous function</Description>
    <SnippetTypes>
      <SnippetType>Expansion</SnippetType>
      <SnippetType>SurroundsWith</SnippetType>
    </SnippetTypes>
  </Header>
  <Snippet>
    <Code Language="jscript"><![CDATA[function () {
    $selected$$end$
}]]></Code>
  </Snippet>
</CodeSnippet>

10 February 2011

Removing the mime-type of files in Subversion with SvnQuery

If you add files to Subversion they are associated with a MIME type. SvnQuery will only index text files, that is, files without an svn:mime-type property or where the property is set to something like “text/*”. At work I wondered why I couldn’t find some words that I knew must exist. It turned out that Subversion marks files stored as UTF-8 with BOM as binary, using svn:mime-type application/octet-stream. This forces the indexer to ignore the content of the file.

I used SvnQuery to find all files that are marked as binary, e.g. t:app* .js finds all JavaScript files and t:app* .cs finds all C# files. With the download button at the bottom of the results page I downloaded a text file with the results. Because svn propdel svn:mime-type [PATH] can work only on one file (it has no --targets option) I had to modify the text file to create a small batch script like this:

svn propdel svn:mime-type c:\workspaces\javascript\file1.js

svn propdel svn:mime-type c:\workspaces\javascript\file2.js

svn propdel svn:mime-type c:\workspaces\javascript\file3.js

…

After this change indexing worked again. I now run a daily query that ensures that no source files are marked as binary.